- The STAR system in action

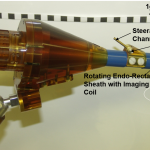

- Robotic MRI-guided prostate biopsy device

- 3D-printed models for surgical planning

Our work spans basic and translational research in the development of novel tools, imaging, and robot control techniques for medical robotics. Specifically, we investigate methodologies that (i) increase the intelligence and autonomy of medical robots and (ii) improve their image guidance, so that they can perform previously impossible tasks, work more efficiently, and improve patient outcomes.

Smart Surgical Systems – Increased autonomy has transformed fields such as manufacturing and aviation by drastically increasing efficiency and reducing failure rates. Pre-operative planning and automation have also improved the outcomes of surgical procedures on rigid anatomy, but progress in soft-tissue surgery has been hindered, mainly because unpredictable shape changes, tissue deformations, and motion limit the use of pre-operative planning. Our research aims to overcome these challenges through:

Robotic Tools – We are developing specialized robotic tools that eliminate the need for complex motions and reduce tissue deformation by building maneuverability and complex actuation into the tool tip.

Improved Surgical Sensing – We are investigating novel surgical imaging techniques to enable high-fidelity quantitative perception and tracking of soft-tissue targets that constantly move and deform due to patient breathing, peristalsis, and tool interactions.

Robot Control Strategies – We are developing novel robot control methods that increase the autonomy of surgical robots and effectively enhance the surgeon’s capabilities.

Image-Guided Interventions and Planning – Diagnostic imaging has improved dramatically over the years, and small tumors and defects are now often detectable before they affect a patient’s health. In many cases, however, imaging during intervention and surgery is limited to basic color cameras, resulting in missed tumors and sub-optimal surgical results. Our research focuses on improving image guidance and image display during planning, intervention, and surgery. This often requires novel displays and specialized robots that work alongside the imaging technique.

Magnetic Resonance Imaging (MRI) Guided Prostate Interventions – MRI is more sensitive in detecting prostate cancer than ultrasound, the current standard for image-guided prostate biopsy. Prostate biopsy inside an MRI magnet, however, is difficult to perform due to material and space restrictions. We were the first to develop and deploy in the clinic an integrated robotic system for trans-rectal prostate biopsy under MRI guidance.

3D Printing and Displays – Congenital heart defects (CHD) are the most common congenital defects, often require open-heart surgery, and are among the leading causes of death in newborns. Despite the rich 3D information provided by cardiac imaging, this information is still largely displayed as multiple contiguous 2D slices of the 3D scan, which is sub-optimal. We are developing novel methods to visualize CHD using 3D printing and 3D displays for education, procedural planning, and patient-specific implant design.

Novel Robotic Actuators – We are developing new actuation schemes for precision guidance of needles and other surgical implements that will allow for complex procedures such as Deep Anterior Lamellar Keratoplasty (DALK) to be performed faster and more precisely, using robotic methods.

Autonomous Ultrasound Robotic System – Unintentional injury or trauma is among the leading causes of death in the United States, with up to 29% of pre-hospital trauma deaths attributed to uncontrolled hemorrhage. Our research focuses on developing a fully autonomous robotic system that performs ultrasound scans and analysis en route to the hospital, enabling earlier trauma diagnosis and faster initiation of life-saving care. We use a KUKA robotic arm as our base platform and are developing machine learning algorithms to detect scannable skin regions, segment wounds, and localize four critical regions on the patient for autonomous ultrasound scanning. The resulting 2D scans are then analyzed to detect hemorrhages and assess their severity. The project also involves modeling and finite element analysis (FEA) to validate the simulated robot’s motion and scans on a custom-designed patient phantom.

Flycycle Course Integration – The Flycycle is a virtual-reality-based system that combines upper- and lower-body exercise on a 2-degree-of-freedom platform: upper-body motion controls the platform’s roll and yaw angles, while a cycling mechanism with variable resistance provides the lower-body workout. An Oculus Rift supplies the virtual reality component; it comprises a head-mounted display, two touch controllers, and two sensors that translate the user’s head movement into virtual reality. Starting in Fall 2019, we are integrating the system into coursework to engage students in robotics and programming. Flycycle courses will be offered at three levels of difficulty: (a) as an introductory course for students from underrepresented communities like MCWIC, (b) as part of an undergraduate robotics course, and (c) as Capstone design projects. Students will have the chance to explore the Flycycle’s potential as an immersive exercise machine, or even a rehabilitation system, while learning to program games and perform sensor fusion at the cutting edge of virtual reality.
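As an illustration of the kind of sensor fusion exercise such a course might include, here is a minimal sketch of a complementary filter, a common introductory technique for estimating a tilt angle such as platform roll. It is not the Flycycle's actual software: the function name, the inputs (a gyroscope rate and an accelerometer-derived angle), and the blending factor `alpha` are all assumptions chosen for teaching purposes.

```python
def complementary_filter(gyro_rates, accel_angles, dt, alpha=0.98, initial_angle=0.0):
    """Fuse gyroscope rates (rad/s) with accelerometer-derived angles (rad).

    Hypothetical classroom example: the gyroscope is trusted over short
    time scales (weight alpha), and the accelerometer corrects slow drift
    (weight 1 - alpha). Returns the list of fused angle estimates.
    """
    angle = initial_angle
    estimates = []
    for rate, accel_angle in zip(gyro_rates, accel_angles):
        # Integrate the gyro rate, then blend with the accelerometer angle.
        angle = alpha * (angle + rate * dt) + (1.0 - alpha) * accel_angle
        estimates.append(angle)
    return estimates


if __name__ == "__main__":
    # Assumed scenario: platform held at 0.1 rad, gyro reports no rotation.
    fused = complementary_filter([0.0] * 500, [0.1] * 500, dt=0.01)
    print(f"final estimate: {fused[-1]:.4f} rad")
```

The single parameter `alpha` makes the trade-off easy to explore: values closer to 1 follow the gyroscope more smoothly but correct drift more slowly, while smaller values track the noisier accelerometer more closely.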